#include "Max6675.h"

Max6675 ts(8, 9, 10);

// Max6675 module: SO on pin #8, SS on pin #9, SCK on pin #10 of Arduino UNO

void setup()

{

ts.setOffset(0);

// set offset for temperature measurement.

Serial.begin(9600);

}

void loop()

{

Serial.print(ts.getCelsius(), 2);

Serial.print(" C / ");

Serial.print(ts.getFahrenheit(), 2);

Serial.print(" F / ");

Serial.print(ts.getKelvin(), 2);

Serial.print(" K\n");

Serial.println();

delay(2000);

}

Minimax Tic Tac Toe

#!/usr/bin/env python3

from math import inf as infinity

from random import choice

import platform

import time

from os import system

HUMAN = -1

COMP = +1

board = [

[0, 0, 0],

[0, 0, 0],

[0, 0, 0],

]

def evaluate(state):

"""

Heuristic evaluation of the state.

:param state: the state of the current board

:return: +1 if the computer wins; -1 if the human wins; 0 draw

"""

if wins(state, COMP):

score = +1

elif wins(state, HUMAN):

score = -1

else:

score = 0

return score

def wins(state, player):

"""

This function tests if a specific player wins. Possibilities:

* Three rows [X X X] or [O O O]

* Three cols [X X X] or [O O O]

* Two diagonals [X X X] or [O O O]

:param state: the state of the current board

:param player: a human or a computer

:return: True if the player wins

"""

win_state = [

[state[0][0], state[0][1], state[0][2]],

[state[1][0], state[1][1], state[1][2]],

[state[2][0], state[2][1], state[2][2]],

[state[0][0], state[1][0], state[2][0]],

[state[0][1], state[1][1], state[2][1]],

[state[0][2], state[1][2], state[2][2]],

[state[0][0], state[1][1], state[2][2]],

[state[2][0], state[1][1], state[0][2]],

]

if [player, player, player] in win_state:

return True

else:

return False

def game_over(state):

"""

This function tests if the human or computer wins

:param state: the state of the current board

:return: True if the human or computer wins

"""

return wins(state, HUMAN) or wins(state, COMP)

def empty_cells(state):

"""

Each empty cell will be added into cells' list

:param state: the state of the current board

:return: a list of empty cells

"""

cells = []

for x, row in enumerate(state):

for y, cell in enumerate(row):

if cell == 0:

cells.append([x, y])

return cells

def valid_move(x, y):

"""

A move is valid if the chosen cell is empty

:param x: X coordinate

:param y: Y coordinate

:return: True if the board[x][y] is empty

"""

if [x, y] in empty_cells(board):

return True

else:

return False

def set_move(x, y, player):

"""

Set the move on board, if the coordinates are valid

:param x: X coordinate

:param y: Y coordinate

:param player: the current player

"""

if valid_move(x, y):

board[x][y] = player

return True

else:

return False

def minimax(state, depth, player):

"""

AI function that chooses the best move

:param state: current state of the board

:param depth: node index in the tree (0 <= depth <= 9),

but never nine in this case (see the ai_turn() function)

:param player: a human or a computer

:return: a list with [the best row, best col, best score]

"""

if player == COMP:

best = [-1, -1, -infinity]

else:

best = [-1, -1, +infinity]

if depth == 0 or game_over(state):

score = evaluate(state)

return [-1, -1, score]

for cell in empty_cells(state):

x, y = cell[0], cell[1]

state[x][y] = player

score = minimax(state, depth - 1, -player)

state[x][y] = 0

score[0], score[1] = x, y

if player == COMP:

if score[2] > best[2]:

best = score # max value

else:

if score[2] < best[2]:

best = score # min value

return best

def clean():

"""

Clears the console

"""

os_name = platform.system().lower()

if 'windows' in os_name:

system('cls')

else:

system('clear')

def render(state, c_choice, h_choice):

"""

Print the board on console

:param state: current state of the board

"""

chars = {

-1: h_choice,

+1: c_choice,

0: ' '

}

str_line = '---------------'

print('\n' + str_line)

for row in state:

for cell in row:

symbol = chars[cell]

print(f'| {symbol} |', end='')

print('\n' + str_line)

def ai_turn(c_choice, h_choice):

"""

It calls the minimax function if the depth < 9,

else it chooses a random coordinate.

:param c_choice: computer's choice X or O

:param h_choice: human's choice X or O

:return:

"""

depth = len(empty_cells(board))

if depth == 0 or game_over(board):

return

clean()

print(f'Computer turn [{c_choice}]')

render(board, c_choice, h_choice)

if depth == 9:

x = choice([0, 1, 2])

y = choice([0, 1, 2])

else:

move = minimax(board, depth, COMP)

x, y = move[0], move[1]

set_move(x, y, COMP)

time.sleep(1)

def human_turn(c_choice, h_choice):

"""

The human plays by choosing a valid move.

:param c_choice: computer's choice X or O

:param h_choice: human's choice X or O

:return:

"""

depth = len(empty_cells(board))

if depth == 0 or game_over(board):

return

# Dictionary of valid moves

move = -1

moves = {

1: [0, 0], 2: [0, 1], 3: [0, 2],

4: [1, 0], 5: [1, 1], 6: [1, 2],

7: [2, 0], 8: [2, 1], 9: [2, 2],

}

clean()

print(f'Human turn [{h_choice}]')

render(board, c_choice, h_choice)

while move < 1 or move > 9:

try:

move = int(input('Use numpad (1..9): '))

coord = moves[move]

can_move = set_move(coord[0], coord[1], HUMAN)

if not can_move:

print('Bad move')

move = -1

except (EOFError, KeyboardInterrupt):

print('Bye')

exit()

except (KeyError, ValueError):

print('Bad choice')

def main():

"""

Main function that calls all functions

"""

clean()

h_choice = '' # X or O

c_choice = '' # X or O

first = '' # if human is the first

# Human chooses X or O to play

while h_choice != 'O' and h_choice != 'X':

try:

print('')

h_choice = input('Choose X or O\nChosen: ').upper()

except (EOFError, KeyboardInterrupt):

print('Bye')

exit()

except (KeyError, ValueError):

print('Bad choice')

# Setting computer's choice

if h_choice == 'X':

c_choice = 'O'

else:

c_choice = 'X'

# Human may start first

clean()

while first != 'Y' and first != 'N':

try:

first = input('First to start?[y/n]: ').upper()

except (EOFError, KeyboardInterrupt):

print('Bye')

exit()

except (KeyError, ValueError):

print('Bad choice')

# Main loop of this game

while len(empty_cells(board)) > 0 and not game_over(board):

if first == 'N':

ai_turn(c_choice, h_choice)

first = ''

human_turn(c_choice, h_choice)

ai_turn(c_choice, h_choice)

# Game over message

if wins(board, HUMAN):

clean()

print(f'Human turn [{h_choice}]')

render(board, c_choice, h_choice)

print('YOU WIN!')

elif wins(board, COMP):

clean()

print(f'Computer turn [{c_choice}]')

render(board, c_choice, h_choice)

print('YOU LOSE!')

else:

clean()

render(board, c_choice, h_choice)

print('DRAW!')

exit()

main()
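The search at the heart of the listing above can be tried without the console loop. What follows is a minimal sketch, not the post's exact code: it keeps the same HUMAN = -1 / COMP = +1 encoding and the same pattern of trying a move, recursing, and undoing it, but it drops the depth argument, returns a tuple instead of a list, and uses a hypothetical LINES table in place of win_state.

```python
from math import inf

HUMAN, COMP = -1, +1

# The eight winning lines as (row, col) triples (illustrative helper table).
LINES = [
    [(0, 0), (0, 1), (0, 2)], [(1, 0), (1, 1), (1, 2)], [(2, 0), (2, 1), (2, 2)],
    [(0, 0), (1, 0), (2, 0)], [(0, 1), (1, 1), (2, 1)], [(0, 2), (1, 2), (2, 2)],
    [(0, 0), (1, 1), (2, 2)], [(2, 0), (1, 1), (0, 2)],
]


def wins(state, player):
    """True if `player` owns all three cells of any line."""
    return any(all(state[r][c] == player for r, c in line) for line in LINES)


def minimax(state, player):
    """Return (row, col, score) of the best move for `player` on `state`."""
    if wins(state, COMP):
        return (-1, -1, +1)
    if wins(state, HUMAN):
        return (-1, -1, -1)
    empty = [(r, c) for r in range(3) for c in range(3) if state[r][c] == 0]
    if not empty:
        return (-1, -1, 0)  # board full, nobody won: draw

    best = (-1, -1, -inf if player == COMP else +inf)
    for r, c in empty:
        state[r][c] = player                 # try the move
        score = minimax(state, -player)[2]   # let the opponent answer
        state[r][c] = 0                      # undo the move
        if (player == COMP and score > best[2]) or \
           (player == HUMAN and score < best[2]):
            best = (r, c, score)
    return best


# COMP (+1) already has two in the top row and can win at (0, 2).
position = [
    [+1, +1, 0],
    [-1, -1, 0],
    [0, 0, 0],
]
best_move = minimax(position, COMP)
print(best_move)  # -> (0, 2, 1)
```

The board is modified in place during the search and restored before each return, so the caller's list is unchanged afterwards.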

Naive Learning

import itertools

import time

import numpy as np

import cv2

from moviepy.editor import VideoClip

WORLD_HEIGHT = 4

WORLD_WIDTH = 4

WALL_FRAC = .2

NUM_WINS = 5

NUM_LOSE = 10

class GridWorld:

def __init__(self, world_height=3, world_width=4, discount_factor=.5, default_reward=-.5, wall_penalty=-.6,

win_reward=5., lose_reward=-10., viz=True, patch_side=120, grid_thickness=2, arrow_thickness=3,

wall_locs=[[1, 1], [1, 2]], win_locs=[[0, 3]], lose_locs=[[1, 3]], start_loc=[0, 0],

reset_prob=.2):

self.world = np.ones([world_height, world_width]) * default_reward

self.reset_prob = reset_prob

self.world_height = world_height

self.world_width = world_width

self.wall_penalty = wall_penalty

self.win_reward = win_reward

self.lose_reward = lose_reward

self.default_reward = default_reward

self.discount_factor = discount_factor

self.patch_side = patch_side

self.grid_thickness = grid_thickness

self.arrow_thickness = arrow_thickness

self.wall_locs = np.array(wall_locs)

self.win_locs = np.array(win_locs)

self.lose_locs = np.array(lose_locs)

self.at_terminal_state = False

self.auto_reset = True

self.random_respawn = True

self.step = 0

self.viz_canvas = None

self.viz = viz

self.path_color = (128, 128, 128)

self.wall_color = (0, 255, 0)

self.win_color = (0, 0, 255)

self.lose_color = (255, 0, 0)

self.world[self.wall_locs[:, 0], self.wall_locs[:, 1]] = self.wall_penalty

self.world[self.lose_locs[:, 0], self.lose_locs[:, 1]] = self.lose_reward

self.world[self.win_locs[:, 0], self.win_locs[:, 1]] = self.win_reward

spawn_condn = lambda loc: self.world[loc[0], loc[1]] == self.default_reward

self.spawn_locs = np.array([loc for loc in itertools.product(np.arange(self.world_height),

np.arange(self.world_width))

if spawn_condn(loc)])

self.start_state = np.array(start_loc)

self.bot_rc = None

self.reset()

self.actions = [self.up, self.left, self.right, self.down, self.noop]

self.action_labels = ['UP', 'LEFT', 'RIGHT', 'DOWN', 'NOOP']

self.q_values = np.ones([self.world.shape[0], self.world.shape[1], len(self.actions)]) * 1. / len(self.actions)

if self.viz:

self.init_grid_canvas()

self.video_out_fpath = 'shm_dqn_gridsolver-' + str(time.time()) + '.mp4'

self.clip = VideoClip(self.make_frame, duration=15)

def make_frame(self, t):

self.action()

frame = self.highlight_loc(self.viz_canvas, self.bot_rc[0], self.bot_rc[1])

return frame

def check_terminal_state(self):

if self.world[self.bot_rc[0], self.bot_rc[1]] == self.lose_reward \

or self.world[self.bot_rc[0], self.bot_rc[1]] == self.win_reward:

self.at_terminal_state = True

# print('------++++---- TERMINAL STATE ------++++----')

# if self.world[self.bot_rc[0], self.bot_rc[1]] == self.win_reward:

# print('GAME WON! :D')

# elif self.world[self.bot_rc[0], self.bot_rc[1]] == self.lose_reward:

# print('GAME LOST! :(')

if self.auto_reset:

self.reset()

def reset(self):

# print('Resetting')

if not self.random_respawn:

self.bot_rc = self.start_state.copy()

else:

self.bot_rc = self.spawn_locs[np.random.choice(np.arange(len(self.spawn_locs)))].copy()

self.at_terminal_state = False

def up(self):

action_idx = 0

# print(self.action_labels[action_idx])

new_r = self.bot_rc[0] - 1

if new_r < 0 or self.world[new_r, self.bot_rc[1]] == self.wall_penalty:

return self.wall_penalty, action_idx

self.bot_rc[0] = new_r

reward = self.world[self.bot_rc[0], self.bot_rc[1]]

self.check_terminal_state()

return reward, action_idx

def left(self):

action_idx = 1

# print(self.action_labels[action_idx])

new_c = self.bot_rc[1] - 1

if new_c < 0 or self.world[self.bot_rc[0], new_c] == self.wall_penalty:

return self.wall_penalty, action_idx

self.bot_rc[1] = new_c

reward = self.world[self.bot_rc[0], self.bot_rc[1]]

self.check_terminal_state()

return reward, action_idx

def right(self):

action_idx = 2

# print(self.action_labels[action_idx])

new_c = self.bot_rc[1] + 1

if new_c >= self.world.shape[1] or self.world[self.bot_rc[0], new_c] == self.wall_penalty:

return self.wall_penalty, action_idx

self.bot_rc[1] = new_c

reward = self.world[self.bot_rc[0], self.bot_rc[1]]

self.check_terminal_state()

return reward, action_idx

def down(self):

action_idx = 3

# print(self.action_labels[action_idx])

new_r = self.bot_rc[0] + 1

if new_r >= self.world.shape[0] or self.world[new_r, self.bot_rc[1]] == self.wall_penalty:

return self.wall_penalty, action_idx

self.bot_rc[0] = new_r

reward = self.world[self.bot_rc[0], self.bot_rc[1]]

self.check_terminal_state()

return reward, action_idx

def noop(self):

action_idx = 4

# print(self.action_labels[action_idx])

reward = self.world[self.bot_rc[0], self.bot_rc[1]]

self.check_terminal_state()

return reward, action_idx

def qvals2probs(self, q_vals, epsilon=1e-4):

action_probs = q_vals - q_vals.min() + epsilon

action_probs = action_probs / action_probs.sum()

return action_probs

def action(self):

# print('================ ACTION =================')

if self.at_terminal_state:

print('At terminal state, please call reset()')

exit()

# print('Start position:', self.bot_rc)

start_bot_rc = self.bot_rc[0], self.bot_rc[1]

q_vals = self.q_values[self.bot_rc[0], self.bot_rc[1]]

action_probs = self.qvals2probs(q_vals)

reward, action_idx = np.random.choice(self.actions, p=action_probs)()

# print('End position:', self.bot_rc)

# print('Reward:', reward)

alpha = np.exp(-self.step / 10e9)

self.step += 1

qv = (1 - alpha) * q_vals[action_idx] + alpha * (reward + self.discount_factor

* self.q_values[self.bot_rc[0], self.bot_rc[1]].max())

self.q_values[start_bot_rc[0], start_bot_rc[1], action_idx] = qv

if self.viz:

self.update_viz(start_bot_rc[0], start_bot_rc[1])

if np.random.rand() < self.reset_prob:

# print('-----> Randomly resetting to a random spawn point with probability', self.reset_prob)

self.reset()

def highlight_loc(self, viz_in, i, j):

starty = i * (self.patch_side + self.grid_thickness)

endy = starty + self.patch_side

startx = j * (self.patch_side + self.grid_thickness)

endx = startx + self.patch_side

viz = viz_in.copy()

cv2.rectangle(viz, (startx, starty), (endx, endy), (255, 255, 255), thickness=self.grid_thickness)

return viz

def update_viz(self, i, j):

starty = i * (self.patch_side + self.grid_thickness)

endy = starty + self.patch_side

startx = j * (self.patch_side + self.grid_thickness)

endx = startx + self.patch_side

patch = np.zeros([self.patch_side, self.patch_side, 3]).astype(np.uint8)

if self.world[i, j] == self.default_reward:

patch[:, :, :] = self.path_color

elif self.world[i, j] == self.wall_penalty:

patch[:, :, :] = self.wall_color

elif self.world[i, j] == self.win_reward:

patch[:, :, :] = self.win_color

elif self.world[i, j] == self.lose_reward:

patch[:, :, :] = self.lose_color

if self.world[i, j] == self.default_reward:

action_probs = self.qvals2probs(self.q_values[i, j])

x_component = action_probs[2] - action_probs[1]

y_component = action_probs[0] - action_probs[3]

magnitude = 1. - action_probs[-1]

s = self.patch_side // 2

x_patch = int(s * x_component)

y_patch = int(s * y_component)

arrow_canvas = np.zeros_like(patch)

vx = s + x_patch

vy = s - y_patch

cv2.arrowedLine(arrow_canvas, (s, s), (vx, vy), (255, 255, 255), thickness=self.arrow_thickness,

tipLength=0.5)

gridbox = (magnitude * arrow_canvas + (1 - magnitude) * patch).astype(np.uint8)

self.viz_canvas[starty:endy, startx:endx] = gridbox

else:

self.viz_canvas[starty:endy, startx:endx] = patch

def init_grid_canvas(self):

org_h, org_w = self.world_height, self.world_width

viz_w = (self.patch_side * org_w) + (self.grid_thickness * (org_w - 1))

viz_h = (self.patch_side * org_h) + (self.grid_thickness * (org_h - 1))

self.viz_canvas = np.zeros([viz_h, viz_w, 3]).astype(np.uint8)

for i in range(org_h):

for j in range(org_w):

self.update_viz(i, j)

def solve(self):

if not self.viz:

while True:

self.action()

else:

self.clip.write_videofile(self.video_out_fpath, fps=460)

def gen_world_config(h, w, wall_frac=.5, num_wins=2, num_lose=3):

n = h * w

num_wall_blocks = int(wall_frac * n)

wall_locs = (np.random.rand(num_wall_blocks, 2) * [h, w]).astype(int)

win_locs = (np.random.rand(num_wins, 2) * [h, w]).astype(int)

lose_locs = (np.random.rand(num_lose, 2) * [h, w]).astype(int)

return wall_locs, win_locs, lose_locs

if __name__ == '__main__':

wall_locs, win_locs, lose_locs = gen_world_config(WORLD_HEIGHT, WORLD_WIDTH, WALL_FRAC, NUM_WINS, NUM_LOSE)

g = GridWorld(world_height=WORLD_HEIGHT, world_width=WORLD_WIDTH,

wall_locs=wall_locs, win_locs=win_locs, lose_locs=lose_locs, viz=True)

g.solve()

k = 0
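Two small pieces of the GridWorld class carry most of the learning logic: qvals2probs, which turns Q-values into sampling probabilities, and the update performed inside action(). The sketch below restates both in plain Python, without NumPy, so they can be checked by hand. The function names qvals_to_probs and q_update are illustrative and do not appear in the listing; the arithmetic is meant to mirror it.

```python
def qvals_to_probs(q_vals, epsilon=1e-4):
    """Shift Q-values so the smallest becomes epsilon, then normalise to sum to 1."""
    lowest = min(q_vals)
    shifted = [q - lowest + epsilon for q in q_vals]
    total = sum(shifted)
    return [s / total for s in shifted]


def q_update(q_old, reward, next_q_vals, alpha, discount):
    """Blend the old estimate with the target reward + discount * max(next Q)."""
    target = reward + discount * max(next_q_vals)
    return (1 - alpha) * q_old + alpha * target


# Five actions that all start with the same Q-value, as in the listing (1 / 5).
probs = qvals_to_probs([0.2] * 5)

# One step onto an ordinary cell (reward -0.5) with discount 0.5 and alpha = 1.
new_q = q_update(q_old=0.2, reward=-0.5, next_q_vals=[0.2] * 5,
                 alpha=1.0, discount=0.5)

print(probs)
print(new_q)
```

With equal Q-values every action is equally likely, and with alpha at 1 the old estimate is replaced outright by the target, which is how the listing behaves early on because alpha = exp(-step / 10e9) stays very close to 1.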

Javascript Tetris – A Simple Implementation

<!DOCTYPE html>

<html lang='en'>

<head>

<meta charset='UTF-8'>

<style>

canvas {

position: absolute;

top: 45%;

left: 50%;

width: 640px;

height: 640px;

margin: -320px 0 0 -320px;

}

</style>

</head>

<body>

<canvas></canvas>

<script>

'use strict';

var canvas = document.querySelector('canvas');

canvas.width = 640;

canvas.height = 640;

var g = canvas.getContext('2d');

var right = { x: 1, y: 0 };

var down = { x: 0, y: 1 };

var left = { x: -1, y: 0 };

var EMPTY = -1;

var BORDER = -2;

var fallingShape;

var nextShape;

var dim = 640;

var nRows = 18;

var nCols = 12;

var blockSize = 30;

var topMargin = 50;

var leftMargin = 20;

var scoreX = 400;

var scoreY = 330;

var titleX = 130;

var titleY = 160;

var clickX = 120;

var clickY = 400;

var previewCenterX = 467;

var previewCenterY = 97;

var mainFont = 'bold 48px monospace';

var smallFont = 'bold 18px monospace';

var colors = ['green', 'red', 'blue', 'purple', 'orange', 'blueviolet', 'magenta'];

var gridRect = { x: 46, y: 47, w: 308, h: 517 };

var previewRect = { x: 387, y: 47, w: 200, h: 200 };

var titleRect = { x: 100, y: 95, w: 252, h: 100 };

var clickRect = { x: 50, y: 375, w: 252, h: 40 };

var outerRect = { x: 5, y: 5, w: 630, h: 630 };

var squareBorder = 'white';

var titlebgColor = 'white';

var textColor = 'black';

var bgColor = '#DDEEFF';

var gridColor = '#BECFEA';

var gridBorderColor = '#7788AA';

var largeStroke = 5;

var smallStroke = 2;

// position of falling shape

var fallingShapeRow;

var fallingShapeCol;

var keyDown = false;

var fastDown = false;

var grid = [];

var scoreboard = new Scoreboard();

addEventListener('keydown', function (event) {

if (!keyDown) {

keyDown = true;

if (scoreboard.isGameOver())

return;

switch (event.key) {

case 'w':

case 'ArrowUp':

if (canRotate(fallingShape))

rotate(fallingShape);

break;

case 'a':

case 'ArrowLeft':

if (canMove(fallingShape, left))

move(left);

break;

case 'd':

case 'ArrowRight':

if (canMove(fallingShape, right))

move(right);

break;

case 's':

case 'ArrowDown':

if (!fastDown) {

fastDown = true;

while (canMove(fallingShape, down)) {

move(down);

draw();

}

shapeHasLanded();

}

}

draw();

}

});

addEventListener('click', function () {

startNewGame();

});

addEventListener('keyup', function () {

keyDown = false;

fastDown = false;

});

function canRotate(s) {

if (s === Shapes.Square)

return false;

var pos = new Array(4);

for (var i = 0; i < pos.length; i++) {

pos[i] = s.pos[i].slice();

}

pos.forEach(function (row) {

var tmp = row[0];

row[0] = row[1];

row[1] = -tmp;

});

return pos.every(function (p) {

var newCol = fallingShapeCol + p[0];

var newRow = fallingShapeRow + p[1];

return grid[newRow][newCol] === EMPTY;

});

}

function rotate(s) {

if (s === Shapes.Square)

return;

s.pos.forEach(function (row) {

var tmp = row[0];

row[0] = row[1];

row[1] = -tmp;

});

}

function move(dir) {

fallingShapeRow += dir.y;

fallingShapeCol += dir.x;

}

function canMove(s, dir) {

return s.pos.every(function (p) {

var newCol = fallingShapeCol + dir.x + p[0];

var newRow = fallingShapeRow + dir.y + p[1];

return grid[newRow][newCol] === EMPTY;

});

}

function shapeHasLanded() {

addShape(fallingShape);

if (fallingShapeRow < 2) {

scoreboard.setGameOver();

scoreboard.setTopscore();

} else {

scoreboard.addLines(removeLines());

}

selectShape();

}

function removeLines() {

var count = 0;

for (var r = 0; r < nRows - 1; r++) {

for (var c = 1; c < nCols - 1; c++) {

if (grid[r][c] === EMPTY)

break;

if (c === nCols - 2) {

count++;

removeLine(r);

}

}

}

return count;

}

function removeLine(line) {

for (var c = 0; c < nCols; c++)

grid[line][c] = EMPTY;

for (var c = 0; c < nCols; c++) {

for (var r = line; r > 0; r--)

grid[r][c] = grid[r - 1][c];

}

}

function addShape(s) {

s.pos.forEach(function (p) {

grid[fallingShapeRow + p[1]][fallingShapeCol + p[0]] = s.ordinal;

});

}

function Shape(shape, o) {

this.shape = shape;

this.pos = this.reset();

this.ordinal = o;

}

var Shapes = {

ZShape: [[0, -1], [0, 0], [-1, 0], [-1, 1]],

SShape: [[0, -1], [0, 0], [1, 0], [1, 1]],

IShape: [[0, -1], [0, 0], [0, 1], [0, 2]],

TShape: [[-1, 0], [0, 0], [1, 0], [0, 1]],

Square: [[0, 0], [1, 0], [0, 1], [1, 1]],

LShape: [[-1, -1], [0, -1], [0, 0], [0, 1]],

JShape: [[1, -1], [0, -1], [0, 0], [0, 1]]

};

function getRandomShape() {

var keys = Object.keys(Shapes);

var ord = Math.floor(Math.random() * keys.length);

var shape = Shapes[keys[ord]];

return new Shape(shape, ord);

}

Shape.prototype.reset = function () {

this.pos = new Array(4);

for (var i = 0; i < this.pos.length; i++) {

this.pos[i] = this.shape[i].slice();

}

return this.pos;

}

function selectShape() {

fallingShapeRow = 1;

fallingShapeCol = 5;

fallingShape = nextShape;

nextShape = getRandomShape();

if (fallingShape != null) {

fallingShape.reset();

}

}

function Scoreboard() {

this.MAXLEVEL = 9;

var level = 0;

var lines = 0;

var score = 0;

var topscore = 0;

var gameOver = true;

this.reset = function () {

this.setTopscore();

level = lines = score = 0;

gameOver = false;

}

this.setGameOver = function () {

gameOver = true;

}

this.isGameOver = function () {

return gameOver;

}

this.setTopscore = function () {

if (score > topscore) {

topscore = score;

}

}

this.getTopscore = function () {

return topscore;

}

this.getSpeed = function () {

switch (level) {

case 0: return 700;

case 1: return 600;

case 2: return 500;

case 3: return 400;

case 4: return 350;

case 5: return 300;

case 6: return 250;

case 7: return 200;

case 8: return 150;

case 9: return 100;

default: return 100;

}

}

this.addScore = function (sc) {

score += sc;

}

this.addLines = function (line) {

switch (line) {

case 1:

this.addScore(10);

break;

case 2:

this.addScore(20);

break;

case 3:

this.addScore(30);

break;

case 4:

this.addScore(40);

break;

default:

return;

}

lines += line;

if (lines > 10) {

this.addLevel();

}

}

this.addLevel = function () {

lines %= 10;

if (level < this.MAXLEVEL) {

level++;

}

}

this.getLevel = function () {

return level;

}

this.getLines = function () {

return lines;

}

this.getScore = function () {

return score;

}

}

function draw() {

g.clearRect(0, 0, canvas.width, canvas.height);

drawUI();

if (scoreboard.isGameOver()) {

drawStartScreen();

} else {

drawFallingShape();

}

}

function drawStartScreen() {

g.font = mainFont;

fillRect(titleRect, titlebgColor);

fillRect(clickRect, titlebgColor);

g.fillStyle = textColor;

g.fillText('Tetris', titleX, titleY);

g.font = smallFont;

g.fillText('click to start', clickX, clickY);

}

function fillRect(r, color) {

g.fillStyle = color;

g.fillRect(r.x, r.y, r.w, r.h);

}

function drawRect(r, color) {

g.strokeStyle = color;

g.strokeRect(r.x, r.y, r.w, r.h);

}

function drawSquare(colorIndex, r, c) {

var bs = blockSize;

g.fillStyle = colors[colorIndex];

g.fillRect(leftMargin + c * bs, topMargin + r * bs, bs, bs);

g.lineWidth = smallStroke;

g.strokeStyle = squareBorder;

g.strokeRect(leftMargin + c * bs, topMargin + r * bs, bs, bs);

}

function drawUI() {

// background

fillRect(outerRect, bgColor);

fillRect(gridRect, gridColor);

// the blocks dropped in the grid

for (var r = 0; r < nRows; r++) {

for (var c = 0; c < nCols; c++) {

var idx = grid[r][c];

if (idx > EMPTY)

drawSquare(idx, r, c);

}

}

// the borders of grid and preview panel

g.lineWidth = largeStroke;

drawRect(gridRect, gridBorderColor);

drawRect(previewRect, gridBorderColor);

drawRect(outerRect, gridBorderColor);

// scoreboard

g.fillStyle = textColor;

g.font = smallFont;

g.fillText('hiscore ' + scoreboard.getTopscore(), scoreX, scoreY);

g.fillText('level ' + scoreboard.getLevel(), scoreX, scoreY + 30);

g.fillText('lines ' + scoreboard.getLines(), scoreX, scoreY + 60);

g.fillText('score ' + scoreboard.getScore(), scoreX, scoreY + 90);

// preview

var minX = 5, minY = 5, maxX = 0, maxY = 0;

nextShape.pos.forEach(function (p) {

minX = Math.min(minX, p[0]);

minY = Math.min(minY, p[1]);

maxX = Math.max(maxX, p[0]);

maxY = Math.max(maxY, p[1]);

});

var cx = previewCenterX - ((minX + maxX + 1) / 2.0 * blockSize);

var cy = previewCenterY - ((minY + maxY + 1) / 2.0 * blockSize);

g.translate(cx, cy);

nextShape.shape.forEach(function (p) {

drawSquare(nextShape.ordinal, p[1], p[0]);

});

g.translate(-cx, -cy);

}

function drawFallingShape() {

var idx = fallingShape.ordinal;

fallingShape.pos.forEach(function (p) {

drawSquare(idx, fallingShapeRow + p[1], fallingShapeCol + p[0]);

});

}

function animate(lastFrameTime) {

var requestId = requestAnimationFrame(function () {

animate(lastFrameTime);

});

var time = new Date().getTime();

var delay = scoreboard.getSpeed();

if (lastFrameTime + delay < time) {

if (!scoreboard.isGameOver()) {

if (canMove(fallingShape, down)) {

move(down);

} else {

shapeHasLanded();

}

draw();

lastFrameTime = time;

} else {

cancelAnimationFrame(requestId);

}

}

}

function startNewGame() {

initGrid();

selectShape();

scoreboard.reset();

animate(-1);

}

function initGrid() {

function fill(arr, value) {

for (var i = 0; i < arr.length; i++) {

arr[i] = value;

}

}

for (var r = 0; r < nRows; r++) {

grid[r] = new Array(nCols);

fill(grid[r], EMPTY);

for (var c = 0; c < nCols; c++) {

if (c === 0 || c === nCols - 1 || r === nRows - 1)

grid[r][c] = BORDER;

}

}

}

function init() {

initGrid();

selectShape();

draw();

}

init();

</script>

</body>

</html>
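The rotation used by canRotate and rotate in the listing above is a quarter turn of each block offset: (x, y) becomes (y, -x). A minimal Python sketch of that step follows, assuming the same convention as the listing (the first number is the column offset, the second the row offset, and EMPTY is -1). The 5 x 5 grid and the helper names are made up for illustration.

```python
EMPTY = -1


def rotate(cells):
    """Rotate (x, y) offsets a quarter turn: (x, y) -> (y, -x)."""
    return [(y, -x) for x, y in cells]


def can_rotate(cells, row, col, grid):
    """True if every rotated cell lands on an EMPTY grid square."""
    return all(grid[row + y][col + x] == EMPTY for x, y in rotate(cells))


T_SHAPE = [(-1, 0), (0, 0), (1, 0), (0, 1)]
grid = [[EMPTY] * 5 for _ in range(5)]

print(rotate(T_SHAPE))                  # -> [(0, 1), (0, 0), (0, -1), (1, 0)]
print(can_rotate(T_SHAPE, 2, 2, grid))  # -> True

grid[1][2] = 0                          # occupy the square above the pivot
print(can_rotate(T_SHAPE, 2, 2, grid))  # -> False
```

Applying rotate four times returns the original offsets, which is why the game never needs a separate table of rotated shapes.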

Calculating the nth Digit of Pi

Blob Game with Collision Detection

<!DOCTYPE html>

<html>

<head>

<style>

body {background-color: Plum;}

canvas{ border: 2px solid black;}

</style>

</head>

<body>

<img id="link1" src="link1 (2).png" alt="Link1" style="display:none">

<img id="link2" src="link2 (2).png" alt="Link2" style="display:none">

<img id="grass1" src="grass1.png" alt="Grass1" style="display:none">

<img id="grass2" src="grass2.png" alt="Grass2" style="display:none">

<img id="grass3" src="grass3.png" alt="Grass3" style="display:none">

<img id="grass4" src="grass4.png" alt="Grass4" style="display:none">

<img id="rock1" src="rock1.png" alt="Rock1" style="display:none">

<canvas id = "game"></canvas>

<script>

var Key = {

_pressed: {},

LEFT: 37,

UP: 38,

RIGHT: 39,

DOWN: 40,

isDown: function(keyCode){

return this._pressed[keyCode];

},

onKeydown: function(event) {

this._pressed[event.keyCode] = true;

},

onKeyup: function(event) {

delete this._pressed[event.keyCode];

}

};

window.addEventListener('keyup', function(event) {Key.onKeyup(event); }, false);

window.addEventListener('keydown', function(event) {Key.onKeydown(event); }, false);

var OBSTACLES = [

[0,0,0,0,0,0,0,0,0,0,0,0,0,0],

[0,0,1,1,0,0,0,0,0,0,0,0,0,0],

[0,1,0,1,0,0,1,0,0,0,0,0,0,0],

[0,1,1,1,0,1,0,1,0,0,0,0,0,0],

[0,1,0,1,0,1,1,1,0,0,0,0,0,0],

[0,1,1,1,0,1,0,1,0,0,0,0,0,0],

[0,0,0,0,0,0,0,0,0,0,0,0,0,0],

[0,0,0,0,0,0,0,0,0,0,0,0,0,0],

[0,0,0,0,0,0,0,0,0,0,0,0,0,1]

];

var canvas = document.getElementById('game');

canvas.width = 1400; //window.innerWidth;

canvas.height =900; //window.innerHeight;

var grid = 100;

var player_width = document.getElementById("link1").width;

var player_height = document.getElementById("link1").height;

//var player_width = document.getElementById("rock1").width;

//var player_height = document.getElementById("rock1").height;

console.log(player_width, player_height);

function isCollidedOb(x,y,width,height,obarray){

// Grid cells [row, col] under the four corners of the bounding box.

var corners = [

[Math.floor(y/grid), Math.floor(x/grid)],

[Math.floor((y+height)/grid), Math.floor(x/grid)],

[Math.floor(y/grid), Math.floor((x+width)/grid)],

[Math.floor((y+height)/grid), Math.floor((x+width)/grid)]

];

// A corner outside the obstacle map counts as free, so wrapping at the edges cannot throw.

return corners.some(function(c){

return Boolean(obarray[c[0]] && obarray[c[0]][c[1]]);

});

}

function drawObs(obarray,grid){

for (let i = 0; i < obarray.length; i++) {

for (let j = 0; j < obarray[i].length; j++) {

if (obarray[i][j]){

ctx.drawImage(rock1,j*grid,i*grid);

}

}

}

}

var x = 50;

var y = 50;

var toggle = 1;

var grass1 = document.getElementById("grass1");

var rock1 = document.getElementById("rock1");

var ctx = canvas.getContext('2d');

var goalX = Math.random() * canvas.width;

var goalY = Math.random() * canvas.height;

var playerSize = 50;

var goalSize = 15;

var speed = 3;

function draw() {

ctx.clearRect(0, 0, canvas.width, canvas.height);

ctx.fillStyle = "rgb(24, 255, 0)";

ctx.fillRect(0, 0, canvas.width, canvas.height);

drawObs(OBSTACLES,grid);

ctx.drawImage(grass1, 200, 200);

//MOVE UP

if(Key.isDown(Key.UP)){

toggle += 1;

if(y < 0){

y= canvas.height-player_height;

}

if(!isCollidedOb(x,Math.abs(y-speed),player_width,player_height,OBSTACLES)){

y-=speed;

}

}

//MOVE DOWN

if(Key.isDown(Key.DOWN)){

toggle += 1;

if(y > canvas.height){

y= y%canvas.height;

}

if(!isCollidedOb(x,y+speed,player_width,player_height,OBSTACLES)){

y+=speed;

}

}

//MOVE LEFT

if(Key.isDown(Key.LEFT)){

toggle += 1;

if(x < 0){

x = canvas.width-player_width;

}

if(!isCollidedOb(x-speed,y,player_width,player_height,OBSTACLES)){

x-=speed;

}

}

//MOVE RIGHT

if(Key.isDown(Key.RIGHT)){

toggle += 1;

if(x > canvas.width){

x= x%canvas.width;

}

if(!isCollidedOb(x+speed,y,player_width,player_height,OBSTACLES)){

x+=speed;

}

}

if ((Math.abs(x-goalX))**2 + (Math.abs(y-goalY))**2 < (playerSize+goalSize)**2){

//playerSize += 5;

goalX = Math.random() * canvas.width;

goalY = Math.random() * canvas.height;

}

// Alternate between the two sprites while the player moves.

toggle %= 100;

var character = document.getElementById(toggle > 50 ? "link1" : "link2");

ctx.drawImage(character, x, y);

var goal = new Path2D();

goal.arc(goalX, goalY, goalSize, 0, 2 * Math.PI);

ctx.fillStyle = "#FF0000";

ctx.fill(goal);

}

setInterval(draw, 10);

</script>

</body>

</html>
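The collision test in the game above maps each corner of the player's bounding box to a cell of the obstacle grid and reports a hit if any of those cells is set. Below is a minimal Python sketch of that idea, assuming square cells of a fixed size and treating corners that fall outside the map as free space. The function name, the tiny 2 x 2 map, and the sizes are made up for illustration.

```python
def is_collided(x, y, width, height, obstacles, cell=100):
    """True if any corner of the box at (x, y) lies in an obstacle cell."""
    corners = [
        (y, x),                    # upper left
        (y + height, x),           # lower left
        (y, x + width),            # upper right
        (y + height, x + width),   # lower right
    ]
    for cy, cx in corners:
        row, col = int(cy // cell), int(cx // cell)
        inside = 0 <= row < len(obstacles) and 0 <= col < len(obstacles[row])
        if inside and obstacles[row][col]:
            return True
    return False


# One obstacle in the bottom-right cell of a 2 x 2 map.
obstacles = [
    [0, 0],
    [0, 1],
]

print(is_collided(50, 50, 40, 40, obstacles))    # -> False
print(is_collided(80, 80, 40, 40, obstacles))    # -> True
print(is_collided(250, 250, 40, 40, obstacles))  # -> False
```

Checking only the corners is enough when the sprite is no larger than one cell; a larger sprite could straddle an obstacle without any corner touching it.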

Arithmetic and Geometric Series/Sequence Formulas

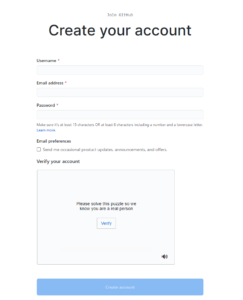
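As a quick way to check the standard closed forms for these sequences, here is a minimal Python sketch. It assumes the usual notation (first term a1, common difference d, common ratio r, number of terms n); the function names are illustrative, and the formulas are the textbook ones rather than a transcription of the image.

```python
def arithmetic_term(a1, d, n):
    """n-th term of an arithmetic sequence: a1 + (n - 1) * d."""
    return a1 + (n - 1) * d


def arithmetic_sum(a1, d, n):
    """Sum of the first n terms: n * (2 * a1 + (n - 1) * d) / 2."""
    return n * (2 * a1 + (n - 1) * d) / 2


def geometric_term(a1, r, n):
    """n-th term of a geometric sequence: a1 * r ** (n - 1)."""
    return a1 * r ** (n - 1)


def geometric_sum(a1, r, n):
    """Sum of the first n terms: a1 * (1 - r ** n) / (1 - r), for r != 1."""
    if r == 1:
        return a1 * n
    return a1 * (1 - r ** n) / (1 - r)


# Compare each closed form with a term-by-term sum.
print(arithmetic_sum(3, 4, 10), sum(arithmetic_term(3, 4, k) for k in range(1, 11)))
print(geometric_sum(2, 3, 5), sum(geometric_term(2, 3, k) for k in range(1, 6)))
```

Both lines print the closed-form result next to the brute-force total, so a mismatch would be easy to spot.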

Setting Up Your Own GitHub and GitHub Page

The first thing we will be doing today is setting up individual GitHub accounts. GitHub is the world’s biggest repository website. A repository is a place where coding projects are stored and updated, allowing many people to collaborate on a large coding project.

Please go to github.com and click “Sign Up” in the upper right corner.

Please DO NOT use your real name as your username.

Also, please keep in mind that your username will become the address of your website, so do not make it too long.

Next, enter your IDEAL email address and a new password that is not the one you use for your IDEAL email. If you tend to forget passwords, write this one down on a piece of paper.

Next you will need to solve a small puzzle to “Verify your account” and prove that you are a person registering the account, not a bot.

On the next page, feel free to give any reason for using GitHub. Your answers on this page can be whatever you choose.

When you see this page:

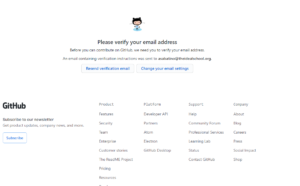

You will need to go to your IDEAL email and look for an email from GitHub.

When you find it, please click “Verify email address”.

You will be taken to this page:

Please click “Create a repository”.

The repository name must MATCH YOUR GITHUB USERNAME EXACTLY, including capitalization, followed by .github.io. For example, if your username is Username123, your repository should be Username123.github.io. This tells GitHub that you will be using this specific project for a website on GitHub Pages. It should say “You found a secret!” when you enter the right name for the repository. Also, please make the repository Public.
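The naming rule can be written down as a tiny check. This is only a sketch in Python with made-up helper names; it follows the rule exactly as stated here (the username, with the same capitalization, followed by .github.io) and does not talk to GitHub at all.

```python
def pages_repo_name(username):
    """Repository name expected for a user's GitHub Pages site."""
    return f"{username}.github.io"


def is_pages_repo(username, repo_name):
    """Exact, case-sensitive comparison, following the rule as stated above."""
    return repo_name == pages_repo_name(username)


print(pages_repo_name("Username123"))                         # -> Username123.github.io
print(is_pages_repo("Username123", "Username123.github.io"))  # -> True
print(is_pages_repo("Username123", "username123.github.io"))  # -> False
```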

On the next page, please click “Create new file” to start your project:

Name your file “index.html” and try the code example below:

Scroll to the bottom and click “Commit new file” to save your changes:

Now you can visit your very own website at www.USERNAME.github.io.

You can see my example at www.idealpoly.github.io

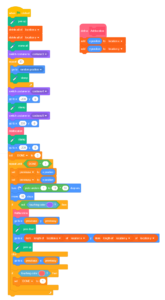

Pathfinding in Scratch

List of Pokémon for Scientific Reasons

Pokémon

Bulbasaur

Ivysaur

Venusaur

Charmander

Charmeleon

Charizard

Squirtle

Wartortle

Blastoise

Caterpie

Metapod

Butterfree

Weedle

Kakuna

Beedrill

Pidgey

Pidgeotto

Pidgeot

Rattata

Raticate

Spearow

Fearow

Ekans

Arbok

Pikachu

Raichu

Sandshrew

Sandslash

Nidoran♀

Nidorina

Nidoqueen

Nidoran♂

Nidorino

Nidoking

Clefairy

Clefable

Vulpix

Ninetales

Jigglypuff

Wigglytuff

Zubat

Golbat

Oddish

Gloom

Vileplume

Paras

Parasect

Venonat

Venomoth

Diglett

Dugtrio

Meowth

Persian

Psyduck

Golduck

Mankey

Primeape

Growlithe

Arcanine

Poliwag

Poliwhirl

Poliwrath

Abra

Kadabra

Alakazam

Machop

Machoke

Machamp

Bellsprout

Weepinbell

Victreebel

Tentacool

Tentacruel

Geodude

Graveler

Golem

Ponyta

Rapidash

Slowpoke

Slowbro

Magnemite

Magneton

Farfetch’d

Doduo

Dodrio

Seel

Dewgong

Grimer

Muk

Shellder

Cloyster

Gastly

Haunter

Gengar

Onix

Drowzee

Hypno

Krabby

Kingler

Voltorb

Electrode

Exeggcute

Exeggutor

Cubone

Marowak

Hitmonlee

Hitmonchan

Lickitung

Koffing

Weezing

Rhyhorn

Rhydon

Chansey

Tangela

Kangaskhan

Horsea

Seadra

Goldeen

Seaking

Staryu

Starmie

Mr. Mime

Scyther

Jynx

Electabuzz

Magmar

Pinsir

Tauros

Magikarp

Gyarados

Lapras

Ditto

Eevee

Vaporeon

Jolteon

Flareon

Porygon

Omanyte

Omastar

Kabuto

Kabutops

Aerodactyl

Snorlax

Articuno

Zapdos

Moltres

Dratini

Dragonair

Dragonite

Mewtwo

Mew

Chikorita

Bayleef

Meganium

Cyndaquil

Quilava

Typhlosion

Totodile

Croconaw

Feraligatr

Sentret

Furret

Hoothoot

Noctowl

Ledyba

Ledian

Spinarak

Ariados

Crobat

Chinchou

Lanturn

Pichu

Cleffa

Igglybuff

Togepi

Togetic

Natu

Xatu

Mareep

Flaaffy

Ampharos

Bellossom

Marill

Azumarill

Sudowoodo

Politoed

Hoppip

Skiploom

Jumpluff

Aipom

Sunkern

Sunflora

Yanma

Wooper

Quagsire

Espeon

Umbreon

Murkrow

Slowking

Misdreavus

Unown

Wobbuffet

Girafarig

Pineco

Forretress

Dunsparce

Gligar

Steelix

Snubbull

Granbull

Qwilfish

Scizor

Shuckle

Heracross

Sneasel

Teddiursa

Ursaring

Slugma

Magcargo

Swinub

Piloswine

Corsola

Remoraid

Octillery

Delibird

Mantine

Skarmory

Houndour

Houndoom

Kingdra

Phanpy

Donphan

Porygon2

Stantler

Smeargle

Tyrogue

Hitmontop

Smoochum

Elekid

Magby

Miltank

Blissey

Raikou

Entei

Suicune

Larvitar

Pupitar

Tyranitar

Lugia

Ho-Oh

Celebi

Treecko

Grovyle

Sceptile

Torchic

Combusken

Blaziken

Mudkip

Marshtomp

Swampert

Poochyena

Mightyena

Zigzagoon

Linoone

Wurmple

Silcoon

Beautifly

Cascoon

Dustox

Lotad

Lombre

Ludicolo

Seedot

Nuzleaf

Shiftry

Taillow

Swellow

Wingull

Pelipper

Ralts

Kirlia

Gardevoir

Surskit

Masquerain

Shroomish

Breloom

Slakoth

Vigoroth

Slaking

Nincada

Ninjask

Shedinja

Whismur

Loudred

Exploud

Makuhita

Hariyama

Azurill

Nosepass

Skitty

Delcatty

Sableye

Mawile

Aron

Lairon

Aggron

Meditite

Medicham

Electrike

Manectric

Plusle

Minun

Volbeat

Illumise

Roselia

Gulpin

Swalot

Carvanha

Sharpedo

Wailmer

Wailord

Numel

Camerupt

Torkoal

Spoink

Grumpig

Spinda

Trapinch

Vibrava

Flygon

Cacnea

Cacturne

Swablu

Altaria

Zangoose

Seviper

Lunatone

Solrock

Barboach

Whiscash

Corphish

Crawdaunt

Baltoy

Claydol

Lileep

Cradily

Anorith

Armaldo

Feebas

Milotic

Castform

Kecleon

Shuppet

Banette

Duskull

Dusclops

Tropius

Chimecho

Absol

Wynaut

Snorunt

Glalie

Spheal

Sealeo

Walrein

Clamperl

Huntail

Gorebyss

Relicanth

Luvdisc

Bagon

Shelgon

Salamence

Beldum

Metang

Metagross

Regirock

Regice

Registeel

Latias

Latios

Kyogre

Groudon

Rayquaza

Jirachi

Deoxys

Turtwig

Grotle

Torterra

Chimchar

Monferno

Infernape

Piplup

Prinplup

Empoleon

Starly

Staravia

Staraptor

Bidoof

Bibarel

Kricketot

Kricketune

Shinx

Luxio

Luxray

Budew

Roserade

Cranidos

Rampardos

Shieldon

Bastiodon

Burmy

Wormadam

Mothim

Combee

Vespiquen

Pachirisu

Buizel

Floatzel

Cherubi

Cherrim

Shellos

Gastrodon

Ambipom

Drifloon

Drifblim

Buneary

Lopunny

Mismagius

Honchkrow

Glameow

Purugly

Chingling

Stunky

Skuntank

Bronzor

Bronzong

Bonsly

Mime Jr.

Happiny

Chatot

Spiritomb

Gible

Gabite

Garchomp

Munchlax

Riolu

Lucario

Hippopotas

Hippowdon

Skorupi

Drapion

Croagunk

Toxicroak

Carnivine

Finneon

Lumineon

Mantyke

Snover

Abomasnow

Weavile

Magnezone

Lickilicky

Rhyperior

Tangrowth

Electivire

Magmortar

Togekiss

Yanmega

Leafeon

Glaceon

Gliscor

Mamoswine

Porygon-Z

Gallade

Probopass

Dusknoir

Froslass

Rotom

Uxie

Mesprit

Azelf

Dialga

Palkia

Heatran

Regigigas

Giratina

Cresselia

Phione

Manaphy

Darkrai

Shaymin

Arceus

Victini

Snivy

Servine

Serperior

Tepig

Pignite

Emboar

Oshawott

Dewott

Samurott

Patrat

Watchog

Lillipup

Herdier

Stoutland

Purrloin

Liepard

Pansage

Simisage

Pansear

Simisear

Panpour

Simipour

Munna

Musharna

Pidove

Tranquill

Unfezant

Blitzle

Zebstrika

Roggenrola

Boldore

Gigalith

Woobat

Swoobat

Drilbur

Excadrill

Audino

Timburr

Gurdurr

Conkeldurr

Tympole

Palpitoad

Seismitoad

Throh

Sawk

Sewaddle

Swadloon

Leavanny

Venipede

Whirlipede

Scolipede

Cottonee

Whimsicott

Petilil

Lilligant

Basculin

Sandile

Krokorok

Krookodile

Darumaka

Darmanitan

Maractus

Dwebble

Crustle

Scraggy

Scrafty

Sigilyph

Yamask

Cofagrigus

Tirtouga

Carracosta

Archen

Archeops

Trubbish

Garbodor

Zorua

Zoroark

Minccino

Cinccino

Gothita

Gothorita

Gothitelle

Solosis

Duosion

Reuniclus

Ducklett

Swanna

Vanillite

Vanillish

Vanilluxe

Deerling

Sawsbuck

Emolga

Karrablast

Escavalier

Foongus

Amoonguss

Frillish

Jellicent

Alomomola

Joltik

Galvantula

Ferroseed

Ferrothorn

Klink

Klang

Klinklang

Tynamo

Eelektrik

Eelektross

Elgyem

Beheeyem

Litwick

Lampent

Chandelure

Axew

Fraxure

Haxorus

Cubchoo

Beartic

Cryogonal

Shelmet

Accelgor

Stunfisk

Mienfoo

Mienshao

Druddigon

Golett

Golurk

Pawniard

Bisharp

Bouffalant

Rufflet

Braviary

Vullaby

Mandibuzz

Heatmor

Durant

Deino

Zweilous

Hydreigon

Larvesta

Volcarona

Cobalion

Terrakion

Virizion

Tornadus

Thundurus

Reshiram

Zekrom

Landorus

Kyurem

Keldeo

Meloetta

Genesect

Chespin

Quilladin

Chesnaught

Fennekin

Braixen

Delphox

Froakie

Frogadier

Greninja

Bunnelby

Diggersby

Fletchling

Fletchinder

Talonflame

Scatterbug

Spewpa

Vivillon

Litleo

Pyroar

Flabébé

Floette

Florges

Skiddo

Gogoat

Pancham

Pangoro

Furfrou

Espurr

Meowstic

Honedge

Doublade

Aegislash

Spritzee

Aromatisse

Swirlix

Slurpuff

Inkay

Malamar

Binacle

Barbaracle

Skrelp

Dragalge

Clauncher

Clawitzer

Helioptile

Heliolisk

Tyrunt

Tyrantrum

Amaura

Aurorus

Sylveon

Hawlucha

Dedenne

Carbink

Goomy

Sliggoo

Goodra

Klefki

Phantump

Trevenant

Pumpkaboo

Gourgeist

Bergmite

Avalugg

Noibat

Noivern

Xerneas

Yveltal

Zygarde

Diancie

Hoopa (C)

Hoopa (U)

Volcanion

Rowlet

Dartrix

Decidueye

Litten

Torracat

Incineroar

Popplio

Brionne

Primarina

Pikipek

Trumbeak

Toucannon

Yungoos

Gumshoos

Grubbin

Charjabug

Vikavolt

Crabrawler

Crabominable

Oricorio

Cutiefly

Ribombee

Rockruff

Lycanroc

Wishiwashi

Mareanie

Toxapex

Mudbray

Mudsdale

Dewpider

Araquanid

Fomantis

Lurantis

Morelull

Shiinotic

Salandit

Salazzle

Stufful

Bewear

Bounsweet

Steenee

Tsareena

Comfey

Oranguru

Passimian

Wimpod

Golisopod

Sandygast

Palossand

Pyukumuku

Type: Null

Silvally

Minior

Komala

Turtonator

Togedemaru

Mimikyu

Bruxish

Drampa

Dhelmise

Jangmo-o

Hakamo-o

Kommo-o

Tapu Koko

Tapu Lele

Tapu Bulu

Tapu Fini

Cosmog

Cosmoem

Solgaleo

Lunala

Nihilego

Buzzwole

Pheromosa

Xurkitree

Celesteela

Kartana

Guzzlord

Necrozma

Magearna

Marshadow